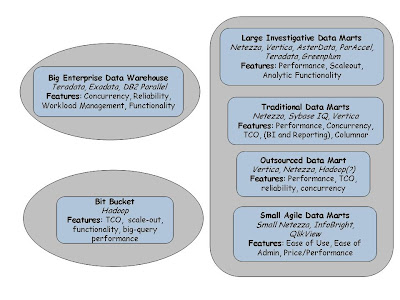

Curt Monash has a great post describing eight kinds of analytic DBMSes. Here's a picture that presents a simplified view, but I highly recommend the original post. There are three major groups: the big enterprise data warehouses (EDWs), various kinds of data marts, and what he calls the "bit bucket" category -- which is where he places Hadoop. I've left out the "archival store" and the "operational analytic server" which was essentially a catch-all category.

Let's look at Hadoop and related technologies -- Monash argues that this is the "big bit bucket" category: a place to gather large amounts of data cheaply and do some analytics. This is the kind of data that you'd normally consider too low-value to justify putting inside your gold-plated EDW. As Hadoop gets better at TCO and performance, and adds better support for reporting, high-level analytics, and workload management, how is it going to affect the other spaces? I've argued before that Hadoop is not going to be the next platform for EDWs for a long time to come.

One way to look at an improved Hadoop is as a bigger, more reliable, lower-cost player in the "large investigative data mart" space. The investigative analytics aspect is likely to be Hadoop's major advantage, along with low cost. Cheaper storage, better programmability, great fault-tolerance, and eventually reasonably good efficiency are going to make for a very interesting entry in that space.

Traditional data marts are really good at being a data mart! Hadoop is a long way off from competing with those -- but there is probably a segment of this market that values elasticity and low cost. My guess is startups like Platfora are going to target this space. I suspect very-high-scale marts that are not very demanding when it comes to concurrency and performance will very soon be better served by Hadoop-based solutions.

The unlikely category of "outsourced data marts" is actually quite promising. As workload management improves, it might be possible to consolidate large outsourced marts for reporting on Hadoop. Individual marts that are > 20PB are unlikely to be a large enough market (at least, not in the short term), but businesses that offer analytics on large datasets (advertising tracking, clickstream analytics for customer modeling, website optimization, etc.) will probably want a highly scalable, elastic, multi-tenant, low-cost mart.

Thursday, November 17, 2011

Tuesday, November 15, 2011

Big Data and Hardware Trends

One of the common refrains from "real" data warehouse folks when it comes to data processing on Hadoop is the assumption that a system built in Java is going to be extremely inefficient for many tasks. This is quite true today. However, for managing large datasets, I wonder if that's the right way to look at it. CPU cycles available per dollar are increasing more rapidly than sequential bandwidth available per dollar. Dave Patterson observed this a long time ago. He noted that the annual improvement rate for CPU cycles was about 1.5x and the improvement rate for disk bandwidth was about 1.3x ... and there seems to be evidence that the disk bandwidth improvements are tapering off.
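To get a feel for how quickly those two rates diverge, here is a rough back-of-the-envelope sketch. It assumes both rates stay constant and compound annually, which is my simplification (the tapering-off mentioned above would only widen the gap). The names `CPU_RATE`, `DISK_BW_RATE`, and `relative_gap` are made up for illustration.

```python
# Annual improvement rates quoted above (attributed to Dave Patterson).
# Assumption: both rates hold steady and compound once per year.
CPU_RATE = 1.5       # CPU cycles per dollar, yearly multiplier
DISK_BW_RATE = 1.3   # sequential disk bandwidth per dollar, yearly multiplier


def relative_gap(years: int) -> float:
    """Factor by which CPU growth outpaces disk-bandwidth growth after `years`."""
    return (CPU_RATE / DISK_BW_RATE) ** years


for years in (1, 3, 5, 10):
    print(f"after {years:2d} years: CPU has outgrown disk bandwidth by "
          f"{relative_gap(years):.2f}x")
```

Under these assumptions the gap is roughly 2x after five years, so a CPU-efficiency penalty of about that size would be absorbed by hardware trends alone.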

While SSDs remain expensive for sheer capacity, disks will continue to store most of the petabytes of "big data". A standard 2U server can only hold so many disks: you max out at about 16 2.5" disks or about 8-10 3.5" disks. This gives us about 1500 MB/s of aggregate sequential bandwidth. You can stuff 48 cores into a 2U server today. This number will probably be around 200 cores in 3-4 years. Even with improvements in disk bandwidth, I'd be surprised if we could get more than 2000 MB/s in 3-4 years using disks. In the meantime, the JVMs will likely close the gap in CPU efficiency. In other words, the penalty for being in Java and therefore CPU inefficient is likely to get progressively smaller.
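The numbers above can be turned into a quick per-core calculation. This is only a sketch using the estimates from this post (1500 MB/s and 48 cores today, 2000 MB/s and 200 cores in 3-4 years); the helper name `mb_per_sec_per_core` is hypothetical, and it assumes the disks are the only data source feeding the cores.

```python
def mb_per_sec_per_core(aggregate_mb_per_sec: float, cores: int) -> float:
    """Sequential disk bandwidth available to each core in one 2U server."""
    return aggregate_mb_per_sec / cores


# Estimates taken from the paragraph above, not measurements.
today = mb_per_sec_per_core(1500, 48)    # 2U server today
future = mb_per_sec_per_core(2000, 200)  # guess for 3-4 years out

print(f"today:  {today:.2f} MB/s per core")
print(f"future: {future:.2f} MB/s per core")
print(f"CPU budget per byte grows by about {today / future:.1f}x")
```

If these guesses hold, each core goes from having about 31 MB/s of disk data to chew on to about 10 MB/s, so it can spend roughly three times as many cycles per byte before the CPU, rather than the disks, becomes the bottleneck.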

There are lots of assumptions in the arguments above, but if they line up, it means that Hadoop-based data management solutions might start to get competitive with current SQL engines for simple workloads on very large datasets. The Tenzing folks were claiming that this is already the case with a C/C++ based MapReduce engine.
