I've been learning about stochastic gradient descent (SGD) and why people seem to be excited about it in the context of building models over large datasets. Rainer Gemulla, Peter Haas, Erik Nijkamp, and Yannis Sismanis published a really cool paper at KDD this year that was my introduction to the problem.

At a high level, SGD is simply an optimization technique that can be used to minimize some objective function (such as a loss function). The main difference from traditional "batch" gradient descent is that SGD samples from the data at each step. That is, the model can be updated after evaluating the gradient of the loss function for a sample of the input data -- a sample that can be as small as a single point! SGD can be incredibly fast on a variety of machine learning tasks -- and often converges to a good solution much faster than conventional batch gradient descent. On large data sets, the performance difference could be an order of magnitude or more. There are, of course, lots of caveats about data size, learning rates, sampling techniques, and convergence rates when it comes to SGD.
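To make the "sample of one point" idea concrete, here is a minimal sketch of my own (not from the paper): SGD fitting a line y = w*x + b under squared error. The function name `sgd_linear_fit`, the learning rate, and the step count are all assumptions picked for a toy problem.

```python
import random

def sgd_linear_fit(points, learning_rate=0.05, steps=5000, seed=0):
    """Fit y ~ w*x + b by SGD on squared error, one sampled point per step."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    for _ in range(steps):
        x, y = rng.choice(points)        # sample a single data point
        error = (w * x + b) - y          # residual on that point only
        w -= learning_rate * error * x   # gradient of 0.5 * error**2 w.r.t. w
        b -= learning_rate * error       # gradient of 0.5 * error**2 w.r.t. b
    return w, b

# Toy, noise-free data drawn from y = 2x + 1 (hypothetical example data).
points = [(k / 10.0, 2.0 * (k / 10.0) + 1.0) for k in range(-10, 11)]
w, b = sgd_linear_fit(points)
print(w, b)
```

Batch gradient descent would sum the gradient over every point before each update; here each update touches exactly one point, which is what makes a step cheap on a large dataset.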

The KDD paper focuses on matrix factorization -- a technique that has received a lot of interest in the context of the "Netflix Problem". Given a sparse C by I matrix M of C customers and I items (say movies), where each entry in the matrix contains a rating, the problem is one of finding factors A and B such that the matrix M' = A x B matches the existing values in M and offers a prediction for the missing values in M. There are many techniques for solving this problem. However, as the matrix M gets larger, and you have billions of ratings from millions of customers, the problem becomes too big to solve on a single machine.
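Here is a small single-machine sketch of what factorizing with SGD might look like. It is my own illustration, not the paper's code: the helper names, the rank of 2, the learning rate, and the toy ratings are all assumptions. For convenience B is stored as one vector per item, so a prediction is the dot product of a customer vector and an item vector.

```python
import math
import random

def init_factors(n, rank, rng):
    """n small random vectors of length `rank`."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(rank)] for _ in range(n)]

def predict(A, B, c, i):
    """Predicted rating of customer c for item i."""
    return sum(a * b for a, b in zip(A[c], B[i]))

def rmse(ratings, A, B):
    """Root mean squared error over the observed ratings only."""
    total = sum((predict(A, B, c, i) - r) ** 2 for (c, i), r in ratings.items())
    return math.sqrt(total / len(ratings))

def sgd_factorize(ratings, A, B, learning_rate=0.02, epochs=1000, seed=0):
    """Update A and B in place, one observed rating at a time."""
    rng = random.Random(seed)
    entries = list(ratings.items())
    for _ in range(epochs):
        rng.shuffle(entries)
        for (c, i), r in entries:
            err = predict(A, B, c, i) - r
            for k in range(len(A[c])):
                a, b = A[c][k], B[i][k]
                A[c][k] -= learning_rate * err * b
                B[i][k] -= learning_rate * err * a

# Hypothetical 4 customers x 4 movies, 12 of 16 ratings observed.
ratings = {
    (0, 0): 5, (0, 1): 5, (0, 2): 1,
    (1, 0): 5, (1, 2): 1, (1, 3): 1,
    (2, 0): 1, (2, 1): 1, (2, 3): 5,
    (3, 1): 1, (3, 2): 5, (3, 3): 5,
}
rng = random.Random(0)
A, B = init_factors(4, 2, rng), init_factors(4, 2, rng)
before = rmse(ratings, A, B)
sgd_factorize(ratings, A, B)
after = rmse(ratings, A, B)
print(before, after, predict(A, B, 0, 3))  # last value: a missing entry
```

Note that each update only touches one row of A and one row of B, which is the property that makes the problem attractive to parallelize.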

Rainer's KDD paper shows how you can use a distributed version of SGD to solve this problem using a cluster of machines. The central idea in the paper is a clever partitioning of the matrix so that each node in the cluster can work on a partition of the data and update the model (the factors A and B) without fine-grained coordination with other nodes in the cluster. The bulk of the paper deals with proving why their partitioning strategy is statistically sound. The paper describes an implementation of this using R and Snowfall for small clusters. They also describe an implementation of this algorithm using Hadoop. The details of the implementation are tricky and complicated -- you need to achieve a certain partitioning of the data, there are certain communication patterns on the model that are not a natural fit for Hadoop, resulting in some inelegant MapReduce code, and a certain amount of additional coordination is required for efficient performance. However, the results are impressive -- the Distributed SGD algorithm converges to a good solution faster and scales much better than alternative techniques.
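As I understand it, the partitioning works because an SGD update for one rating only touches one row of A and one column of B, so blocks of the matrix that share no rows and no columns can be processed at the same time without stepping on each other. Here is a rough sketch of one such scheme: cut M into a d x d grid and, in each round, hand out d blocks that lie on a shifted diagonal. The function names are mine, and this leaves out everything about the actual R and Hadoop implementations.

```python
def strata(d):
    """d rounds; round s holds d (row_block, col_block) pairs with no
    row block or column block repeated within the round."""
    return [[(b, (b + s) % d) for b in range(d)] for s in range(d)]

def block_of(index, size, d):
    """Map a row or column index in [0, size) to one of d contiguous blocks."""
    return index * d // size

def entries_by_block(ratings, n_rows, n_cols, d):
    """Group observed (row, col) -> rating entries by the block they fall in."""
    blocks = {}
    for (r, c), value in ratings.items():
        key = (block_of(r, n_rows, d), block_of(c, n_cols, d))
        blocks.setdefault(key, []).append(((r, c), value))
    return blocks

# With d = 3 workers, each round assigns one block per worker.
for round_number, stratum in enumerate(strata(3)):
    print(round_number, stratum)
```

Within a round, worker b would run plain SGD over the entries in its block; the workers only need to synchronize between rounds, when the column blocks of B change hands.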

As one can imagine, Netflix isn't the only company that needs to solve large recommendation problems. There are many large content publishers, marketplaces, and retailers working hard on good solutions to this problem. What's more, the ability to factorize large matrices is good not just for content recommendation. It can be used as a building block for clustering algorithms, topic detection, and even risk analytics. Neat stuff. This should enable some interesting new recommendation-style applications and provide alternate/faster implementations for existing ones.

Tuesday, September 20, 2011

Thursday, September 15, 2011

New Ideas In Datacenter Networking

I've recently been stumbling into interesting papers on new ways to wire up datacenters to guarantee properties like high bisection bandwidth without the need for expensive networking hardware like big second level switches. I don't normally read a lot about networking but some of the stuff I've been working on lately has given me a peek into the SIGCOMM, NSDI world ...

CamCube, BCube, and DCell all seem to be exploring ways of giving up the big expensive switch at the top of a tree-structured network in favor of multiple NICs on each server and/or multiple smaller switches connected in interesting topologies. I suspect this is still very early stage stuff, and many problems such as cabling, serviceability, availability, NIC-to-NIC transfer without burning CPU, etc. need to be solved before it becomes interesting to a practitioner. But the papers do offer an intriguing read for someone who normally follows the database and systems communities.

In the Hadoop/MapReduce context, this could change how we think about scheduling tasks and scheduling transfers when multiple MapReduce jobs are running. One of the papers actually talks about how Hadoop workloads could be affected by these new topologies. One criticism of these approaches in the context of Hadoop clusters is that they probably only get interesting for mid-to-large clusters. Monash reports that the median Hadoop cluster is about 30 nodes and that the average is about 200 nodes (numbers courtesy Omer Trajman of Cloudera). At these sizes, there are cheaper and easier ways to wire up a Hadoop cluster. Fun papers nevertheless!

Thursday, September 8, 2011

Spinnaker VLDB Slides

[edit] Here's the full presentation I used at VLDB:

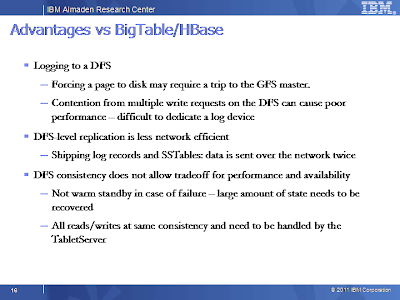

Here's a subset of the slides I used at VLDB comparing Spinnaker with Bigtable, Dynamo, and PNUTS:

Spinnaker VLDB 2011

Sunday, September 4, 2011

VLDB 2011

I was at VLDB last week. I got to see a bunch of interesting talks and had several fun hallway conversations.

Here are some notes from the conference:

Challenges and Vision Track

The Challenges and Vision track was probably the most fun. Two talks stood out to me (although I didn't attend all the talks in this session): Magdalena Balazinska's talk on Data Markets and Peter Haas' "Data is Dead -- Without What If Models". Magda's argument was that public clouds are turning out to be a very convenient place to buy and sell data, and that research is required to understand how data and data services can be priced and sold. She argued that the current tiered subscription models don't really cut it (I'm not sure I fully buy it, but it is an interesting proposition), and that this brings about many challenges at the intersection of databases and economics.

Peter Haas (who also works at IBM Almaden) argued that the database community has traditionally been good at descriptive statistics and shallow models, but will need to get good at supporting deep, predictive models to really unlock the value of all the data we're gathering. He gave examples from the healthcare world and the weather simulation world on how deeper models help us ask harder "what-if" questions. In effect he was challenging the data management community to reinvent itself as the "data and model management" community.

Traditional Database Research

Thomas Neumann of MPI gave a fun talk about compiled query processing for modern architectures. He argued that giving compilers a fighting chance to optimize your query plan after generating it in C++ (or LLVM) can be really good for query performance. In fact, with modern architectures, this might be required to get much better performance than the iterator pipelining model used in many commercial runtimes. Much of this builds on the observations that the MonetDB guys have made in the context of columnar processing techniques and the overheads of row-oriented operator pipelining-based runtimes. I expect this will be a good paper to read.
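To show the structural difference (and only that; Python won't reproduce the performance gap), here is a toy sketch of my own for a made-up query, "sum price where qty > 10". The first version pulls rows through a chain of operators one `next()` call at a time; the second is the kind of single fused loop a query compiler could emit instead. The class names and the query are hypothetical, not from the talk.

```python
class Scan:
    """Leaf operator: hands out one row per next() call, None when done."""
    def __init__(self, rows):
        self._it = iter(rows)

    def next(self):
        return next(self._it, None)

class Filter:
    """Pulls from its child until a row passes the predicate."""
    def __init__(self, child, predicate):
        self.child, self.predicate = child, predicate

    def next(self):
        while True:
            row = self.child.next()
            if row is None or self.predicate(row):
                return row

class SumColumn:
    """Root operator: drains its child and sums one column."""
    def __init__(self, child, column):
        self.child, self.column = child, column

    def run(self):
        total = 0
        row = self.child.next()
        while row is not None:
            total += row[self.column]
            row = self.child.next()
        return total

def fused_sum(rows):
    """The same query as one tight loop, with no per-row operator calls."""
    total = 0
    for row in rows:
        if row["qty"] > 10:
            total += row["price"]
    return total

rows = [{"qty": 5, "price": 10}, {"qty": 20, "price": 7}, {"qty": 30, "price": 3}]
plan = SumColumn(Filter(Scan(rows), lambda r: r["qty"] > 10), "price")
print(plan.run(), fused_sum(rows))  # both print 10
```

In the iterator version every row crosses several call boundaries; the fused loop keeps the row in hand from scan to sum, which is the shape a compiler can optimize well.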

RemusDB, the best paper award winner, was an interesting talk that showed how VM-replication now performs well enough to be a way to provide High-Availability for any arbitrary database implementation. While this approach does make for a quick-and-dirty HA solution that deals with the primary failing, I'm not sure a VM level solution would work when the failed primary restarts and wants to rejoin the master-slave pair. Nevertheless, this will probably make a fun read for anyone interested in database systems.

Several groups in academia, including HyPer (a demo from some of the guys at TU-Munich) and HYRISE (from HPI), have been playing with ideas for main-memory systems that can provide good OLTP performance while also supporting a reasonable OLAP workload. Their argument is that in most cases, you don't really need to have two separate systems -- one for OLTP and one for OLAP. I guess it is back to "one size fits all" now, eh?

There was also a cool paper on serializable snapshot isolation for replicated databases optimized for high update rates from folks at the University of Sydney and Seoul National University.

Industrial Talks

Google described Tenzing, a SQL engine on MapReduce. The interesting part was that they worked with the MapReduce team quite a bit to make it possible to implement reasonably efficient join plans. The speaker flashed some slides that seemed to indicate that the per-node performance of Tenzing was "comparable" to a commercial database.

Ben Reed talked about "Inspector Gadget" -- a debugging tool for Pig that is currently in use at Yahoo! and has been open sourced as part of Pig 0.9.

Other Stuff

There were a bunch of interesting efforts around Hadoop and MapReduce that I'll write about in more detail later. There seemed to be some interest at the intersection of crowdsourcing and databases -- I'm still not sure I understand the opportunities there.
